Monty Hall Problem

Problem Description:

The Monty Hall Problem is a famous puzzle in probability theory. It is usually stated as follows:

Suppose you’re on a game show, and you’re given the choice of three doors: Behind one door is a car; behind the others, goats. You pick a door, say No. 1, and the host, who knows what’s behind the doors, opens another door, say No. 3, which has a goat. He then says to you, “Do you want to pick door No. 2?” Is it to your advantage to switch your choice?

Intuitively, it seems that switching doors should bring no benefit. But using Bayes’ Theorem we can show that the contestant has a higher chance of winning by switching.

You can also check out the Wikipedia page: https://en.wikipedia.org/wiki/Monty_Hall_problem
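Before turning to the formal argument, the claim that switching helps can be checked empirically. The following is a minimal simulation sketch using only the standard library; the function names `play` and `win_rate`, the number of trials, and the seed are illustrative choices, and the doors are indexed 0, 1, 2. It assumes the host always opens a goat door other than the contestant's pick, choosing at random when two such doors exist.

```python
import random


def play(switch: bool, rng: random.Random) -> bool:
    """Play one round and return True if the contestant wins the car."""
    doors = [0, 1, 2]
    prize = rng.choice(doors)
    choice = rng.choice(doors)
    # The host opens a door that is neither the contestant's pick nor the prize.
    host = rng.choice([d for d in doors if d != choice and d != prize])
    if switch:
        # Move to the single door that is still closed and not yet chosen.
        choice = next(d for d in doors if d != choice and d != host)
    return choice == prize


def win_rate(switch: bool, trials: int = 100_000, seed: int = 0) -> float:
    """Estimate the winning probability of a strategy by repeated play."""
    rng = random.Random(seed)
    return sum(play(switch, rng) for _ in range(trials)) / trials


if __name__ == "__main__":
    print(f"stay:   {win_rate(switch=False):.3f}")
    print(f"switch: {win_rate(switch=True):.3f}")
```

With enough trials the estimate for staying should settle near 1/3 and the estimate for switching near 2/3.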

Probabilistic Interpretation:

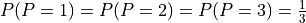

So have 3 random variables Contestant  , Host

, Host  and prize

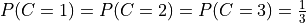

and prize  . The prize has been put randomly behind the doors therefore:

. The prize has been put randomly behind the doors therefore:  . Also, the contestant is going to choose the door randomly, therefore:

. Also, the contestant is going to choose the door randomly, therefore:  . For this problem we can build a Bayesian Network structure like:

. For this problem we can build a Bayesian Network structure like:

[4]:

from IPython.display import Image

Image("images/monty.png")


with the following CPDs:

    P(C):
    +----------+----------+-----------+-----------+
    |    C     |    0     |     1     |     2     |
    +----------+----------+-----------+-----------+
    |          |   0.33   |   0.33    |   0.33    |
    +----------+----------+-----------+-----------+

    P(P):
    +----------+----------+-----------+-----------+
    |    P     |    0     |     1     |     2     |
    +----------+----------+-----------+-----------+
    |          |   0.33   |   0.33    |   0.33    |
    +----------+----------+-----------+-----------+

    P(H | P, C):
    +------+------+------+------+------+------+------+------+------+------+
    |  C   |         0          |         1          |         2          |
    +------+------+------+------+------+------+------+------+------+------+
    |  P   |  0   |  1   |  2   |  0   |  1   |  2   |  0   |  1   |  2   |
    +------+------+------+------+------+------+------+------+------+------+
    | H=0  |  0   |  0   |  0   |  0   | 0.5  |  1   |  0   |  1   | 0.5  |
    +------+------+------+------+------+------+------+------+------+------+
    | H=1  | 0.5  |  0   |  1   |  0   |  0   |  0   |  1   |  0   | 0.5  |
    +------+------+------+------+------+------+------+------+------+------+
    | H=2  | 0.5  |  1   |  0   |  1   | 0.5  |  0   |  0   |  0   |  0   |
    +------+------+------+------+------+------+------+------+------+------+
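The entries of P(H | P, C) follow from one rule: the host never opens the contestant's door or the prize door, and picks uniformly among the doors that remain. As a sketch of that rule (the helper name `host_cpd` is hypothetical and not part of pgmpy), the table can be generated rather than typed by hand. Columns are ordered by (C, P) with C varying slowest, which is the layout shown in the table.

```python
def host_cpd() -> list[list[float]]:
    """Build P(H | C, P) for three doors.

    Rows are indexed by H; columns are ordered (C, P) with C varying slowest.
    """
    n_doors = 3
    table = [[0.0] * (n_doors * n_doors) for _ in range(n_doors)]
    for c in range(n_doors):
        for p in range(n_doors):
            # Doors the host may open: not the contestant's pick, not the prize.
            allowed = [h for h in range(n_doors) if h != c and h != p]
            for h in allowed:
                table[h][c * n_doors + p] = 1.0 / len(allowed)
    return table


if __name__ == "__main__":
    for h, row in enumerate(host_cpd()):
        print(f"H={h}", row)
```

When the contestant's pick and the prize coincide, two doors remain and each gets probability 0.5; otherwise only one door remains and it gets probability 1.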

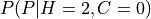

Let’s say that the contestant selected door 0 and the host opened door 2. We need to find the posterior probability of the prize location, i.e. $P(P \mid C=0, H=2)$.
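This posterior can also be worked out by hand with Bayes’ Theorem, which gives a reference value for the numerical inference. The derivation is a sketch under the model's assumptions: $C$ and $P$ are independent with uniform priors, so $P(P=p \mid C=0) = P(P=p) = \frac{1}{3}$, and the likelihoods $P(H=2 \mid C=0, P=p)$ are read from the $C=0$ columns of the CPD table for $H$.

```latex
\begin{aligned}
P(P=p \mid C=0, H=2)
  &= \frac{P(H=2 \mid C=0, P=p)\, P(P=p)}
          {\sum_{p'=0}^{2} P(H=2 \mid C=0, P=p')\, P(P=p')} \\[6pt]
P(P=0 \mid C=0, H=2)
  &= \frac{\tfrac{1}{2} \cdot \tfrac{1}{3}}
          {\tfrac{1}{2} \cdot \tfrac{1}{3} + 1 \cdot \tfrac{1}{3} + 0 \cdot \tfrac{1}{3}}
   = \frac{1/6}{1/2} = \frac{1}{3} \\[6pt]
P(P=1 \mid C=0, H=2)
  &= \frac{1 \cdot \tfrac{1}{3}}{1/2} = \frac{2}{3} \\[6pt]
P(P=2 \mid C=0, H=2)
  &= \frac{0 \cdot \tfrac{1}{3}}{1/2} = 0
\end{aligned}
```

The host's choice is twice as likely when the prize is behind door 1 (he is forced to open door 2) as when it is behind door 0 (he picks door 2 only half the time), which is where the 2:1 ratio comes from.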

[ ]:

from pgmpy.models import DiscreteBayesianNetwork
from pgmpy.factors.discrete import TabularCPD

# Defining the network structure
model = DiscreteBayesianNetwork([("C", "H"), ("P", "H")])

# Defining the CPDs (doors are indexed 0, 1, 2).
# Exact thirds are used so that each prior sums to 1.
cpd_c = TabularCPD("C", 3, [[1 / 3], [1 / 3], [1 / 3]])
cpd_p = TabularCPD("P", 3, [[1 / 3], [1 / 3], [1 / 3]])

# Columns are ordered by (C, P) with C varying slowest, matching evidence=["C", "P"].
cpd_h = TabularCPD(
    "H",
    3,
    [
        [0, 0, 0, 0, 0.5, 1, 0, 1, 0.5],
        [0.5, 0, 1, 0, 0, 0, 1, 0, 0.5],
        [0.5, 1, 0, 1, 0.5, 0, 0, 0, 0],
    ],
    evidence=["C", "P"],
    evidence_card=[3, 3],
)

# Associating the CPDs with the network structure.
model.add_cpds(cpd_c, cpd_p, cpd_h)

# Some other methods
model.get_cpds()


[<TabularCPD representing P(C:3) at 0x7f580a175310>,
 <TabularCPD representing P(P:3) at 0x7f58128ad520>,
 <TabularCPD representing P(H:3 | C:3, P:3) at 0x7f580a175340>]

[6]:

# check_model checks the model structure and the associated CPDs.
# It returns True if everything is correct and raises an exception otherwise.
model.check_model()

[6]:

True

[7]:

# Inferring the posterior probability
from pgmpy.inference import VariableElimination

infer = VariableElimination(model)
posterior_p = infer.query(["P"], evidence={"C": 0, "H": 2})
print(posterior_p)


    +------+----------+
    | P    |   phi(P) |
    +======+==========+
    | P(0) |   0.3333 |
    +------+----------+
    | P(1) |   0.6667 |
    +------+----------+
    | P(2) |   0.0000 |
    +------+----------+

We see that the posterior probability of the prize being behind door 1 (0.6667) is higher than that for door 0 (0.3333). Therefore, the contestant should switch doors.
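The result does not depend on the particular doors chosen in the example. As a cross-check that needs no pgmpy, the sketch below enumerates the joint distribution $P(C)\,P(P)\,P(H \mid C, P)$ with exact fractions and computes the posterior for every consistent pair of contestant and host doors. The helper names `p_host` and `posterior_prize` are hypothetical, and the host rule is the one encoded in the CPD for $H$.

```python
from fractions import Fraction
from itertools import product

DOORS = (0, 1, 2)
THIRD = Fraction(1, 3)


def p_host(h: int, c: int, p: int) -> Fraction:
    """P(H=h | C=c, P=p): the host opens a goat door other than c, uniformly."""
    allowed = [d for d in DOORS if d != c and d != p]
    return Fraction(1, len(allowed)) if h in allowed else Fraction(0)


def posterior_prize(c: int, h: int) -> dict:
    """P(P | C=c, H=h), obtained by normalising the joint over P."""
    joint = {p: THIRD * THIRD * p_host(h, c, p) for p in DOORS}
    total = sum(joint.values())
    return {p: joint[p] / total for p in DOORS}


if __name__ == "__main__":
    for c, h in product(DOORS, DOORS):
        if c == h:
            continue  # the host never opens the contestant's door
        post = posterior_prize(c, h)
        other = next(d for d in DOORS if d not in (c, h))
        print(f"C={c}, H={h}: stay={post[c]}, switch={post[other]}")
```

For every such pair, the door the contestant could switch to carries twice the posterior probability of the door originally picked.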